Edition 92

Using AI-based Object Detection For Finding Elements

It has been possible for some time to use AI to find elements in Appium, via the collaboration between Test.ai and the Appium team. We work together to maintain the test-ai-classifier project, and in this edition of Appium Pro we'll take a look at an advanced element finding mode available in v3 of the plugin.

A tale of two modes

The default mode for the plugin is what I call "element lookup" mode. Let's say we're trying to find an icon that looks like a clock on the screen. The way this mode works is that Appium finds every leaf-node element in the view, generates an image of how it appears on screen, and then checks to see whether that image looks like a clock. If so, then the plugin will remember the element that was associated with that image, and return it as the result of the call to findElement.
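To make that flow concrete, here is a minimal sketch of the idea in plain Java. It is not the plugin's actual code: the `Node` type, the `scorer` function (standing in for "take an image of the element and run it through the classifier"), and the rule of picking the highest-scoring leaf above a threshold are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.ToDoubleFunction;

public class ElementLookupSketch {

    /** A hypothetical, simplified view-hierarchy node (not an Appium type). */
    public static class Node {
        public final String name;
        public final List<Node> children = new ArrayList<>();

        public Node(String name) {
            this.name = name;
        }

        public Node add(Node child) {
            children.add(child);
            return this;
        }
    }

    /** Collects every node that has no children, in document order. */
    public static List<Node> leaves(Node root) {
        List<Node> out = new ArrayList<>();
        if (root.children.isEmpty()) {
            out.add(root);
        } else {
            for (Node child : root.children) {
                out.addAll(leaves(child));
            }
        }
        return out;
    }

    /**
     * Scores each leaf for the wanted label and returns the first
     * highest-scoring leaf whose score reaches the threshold, if any.
     * The scorer is a stand-in for the real image classifier.
     */
    public static Optional<Node> find(Node root, ToDoubleFunction<Node> scorer, double threshold) {
        Node best = null;
        double bestScore = 0.0;
        for (Node leaf : leaves(root)) {
            double score = scorer.applyAsDouble(leaf);
            if (score >= threshold && (best == null || score > bestScore)) {
                best = leaf;
                bestScore = score;
            }
        }
        return Optional.ofNullable(best);
    }
}
```

The important property is that the result is always one of the leaf elements that already exists in the hierarchy, which is why the caller gets back a real element it can interact with.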

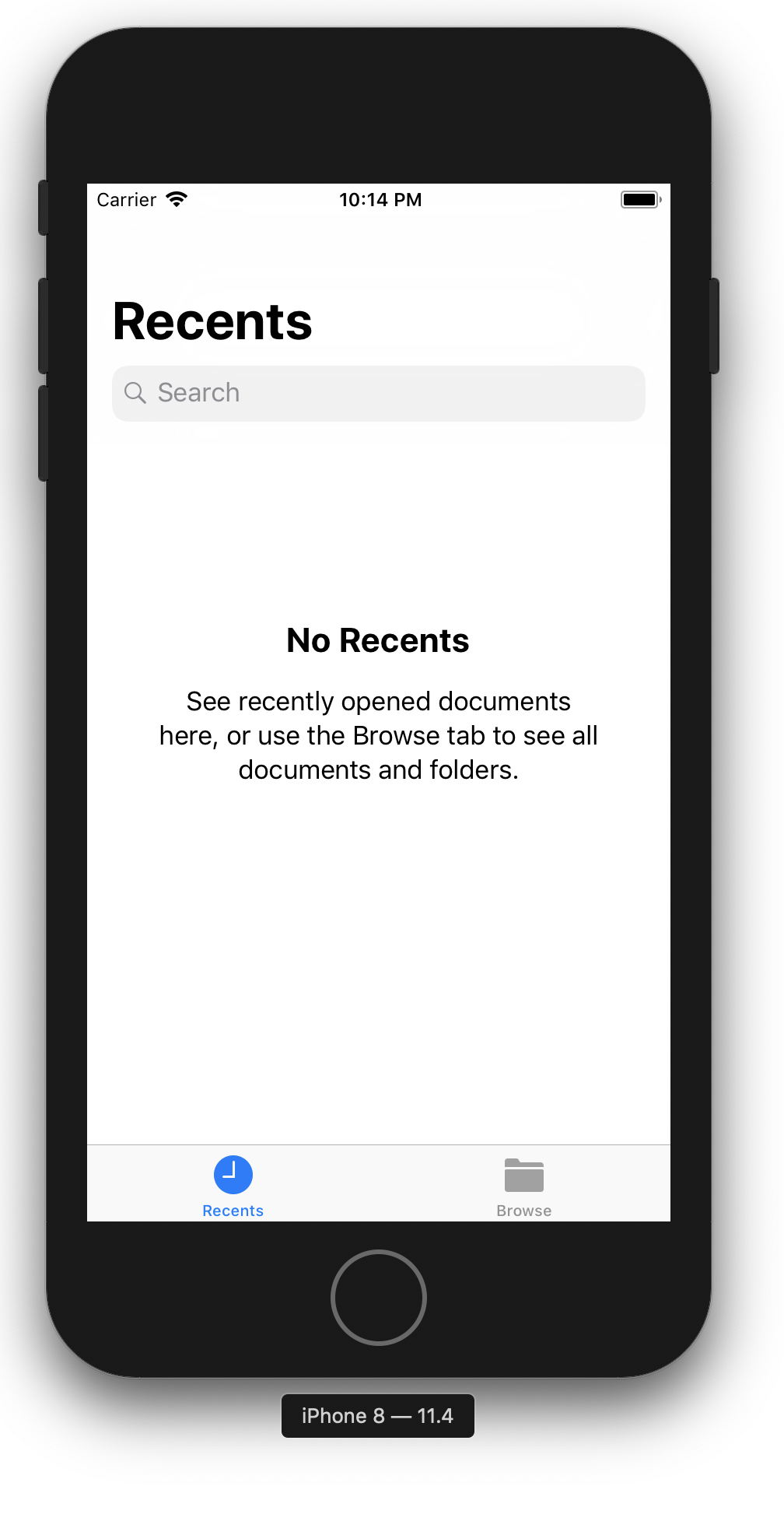

This mode works great for finding many icons, and is pretty speedy on top of that. However, it can run into some big problems! Let's say we're working with the Files app on iOS:

Now let's say we want to find and tap on the "Recents" button at the bottom of the screen. There's an icon that looks like a clock, so we might be tempted to try and find the element using that label:

driver.findElement(MobileBy.custom("ai:clock")).click();

This might work depending on the confidence level set, but it will probably fail. Why would it fail? The issue is that, on iOS especially, the clock icon is not its own element! It's grouped together with the accompanying text into a single element.

When a screenshot is taken of this element, and it's fed into the classifier model, the arbitrary text that's included as part of the image will potentially confuse the model and lead to a bad result.

Object detection mode

Version 3.0 of the classifier plugin introduced a second element finding mode to deal with this kind of problem. Because there are now two modes, we need a way to tell the plugin which one we want to use. We do that with the testaiFindMode capability, which can take two values:

- element_lookup (the default)
- object_detection

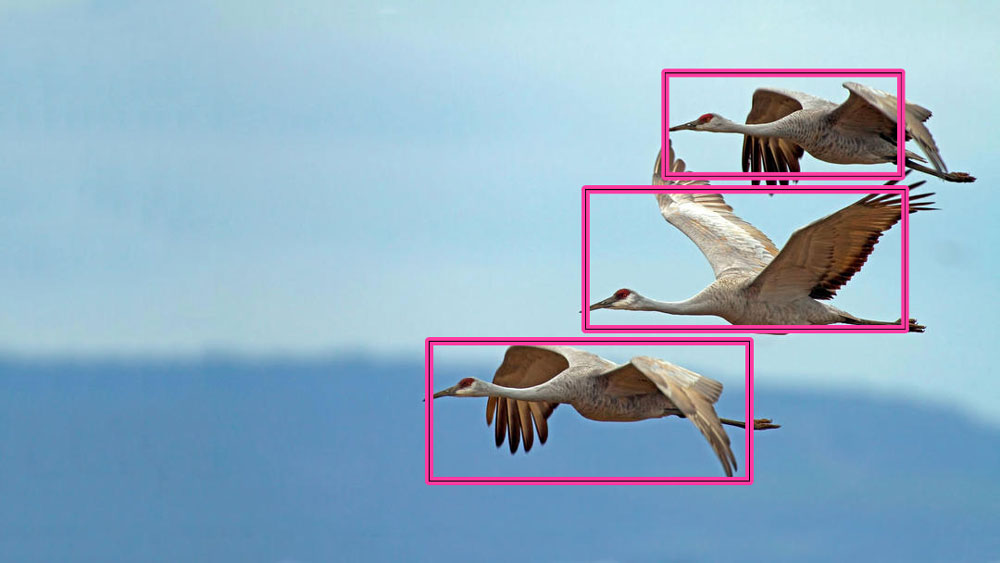

What is object detection, and how does it help? Object detection is a feature of many image-based machine learning models, which aims to take an input image and generate as its output a list of regions within that image that count as distinct objects. The image of birds with boxes around them up top is an example of what an object detection algorithm or machine learning model can achieve. Carving up the world into separate objects is something our human brains do quite naturally, but it's a fairly complex thing to figure out how to train a computer to do it!

Once objects have been detected in an image, they can optionally be classified. The object detection mode of the plugin does exactly this: it takes a screenshot of the entire screen, runs this entire screenshot through an object detection model, and then cuts up screen regions based on objects detected by the model. Those cut-up images (hopefully representing meaningful objects) are then sent through the same classifier as in element lookup mode, so we can see if any of them match the type of icon that we're looking for.
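The detect-then-classify pipeline described above can be sketched in plain Java as follows. This is only an illustration under stated assumptions, not the plugin's implementation: `detector` and `classifier` are hypothetical stand-ins for the two models, and choosing the highest-scoring region above a threshold is my own simplification.

```java
import java.awt.Rectangle;
import java.awt.image.BufferedImage;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;
import java.util.function.ToDoubleFunction;

public class ObjectDetectionSketch {

    /**
     * Runs the (hypothetical) detector over the whole screenshot, crops each
     * detected region, scores each crop with the (hypothetical) classifier,
     * and returns the first highest-scoring region that reaches the threshold.
     */
    public static Optional<Rectangle> find(
            BufferedImage screenshot,
            Function<BufferedImage, List<Rectangle>> detector,
            ToDoubleFunction<BufferedImage> classifier,
            double threshold) {
        Rectangle screen = new Rectangle(0, 0, screenshot.getWidth(), screenshot.getHeight());
        Rectangle best = null;
        double bestScore = 0.0;
        for (Rectangle region : detector.apply(screenshot)) {
            // Clip the region to the screenshot so cropping cannot go out of bounds.
            Rectangle box = region.intersection(screen);
            if (box.isEmpty()) {
                continue;
            }
            BufferedImage crop = screenshot.getSubimage(box.x, box.y, box.width, box.height);
            double score = classifier.applyAsDouble(crop);
            if (score >= threshold && (best == null || score > bestScore)) {
                best = box;
                bestScore = score;
            }
        }
        return Optional.ofNullable(best);
    }
}
```

Notice that the result is a rectangle of screen coordinates rather than an entry in the element hierarchy. Nothing in this flow ever consults the hierarchy at all.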

The main advantage of object detection mode is that the plugin will look at the screen the way a human would--if you ask it to find a clock, it won't matter if there's a unique 'clock' element all by itself somewhere on the screen or not. This is also the main disadvantage, because when using this mode, we are not finding actual elements. We're just finding locations on the screen where we believe an element to be, i.e., image elements. This is totally fine if we just want to tap something, but if we're trying to find an input box or other type of control to work with using something like sendKeys, we're out of luck.
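One way to picture what we get back in this mode is as a bare screen region with a tap point. The class below is a hypothetical model I made up for illustration (it is not an Appium or plugin type), and tapping the center of the region is an assumption about how a click on such a region would reasonably behave.

```java
import java.awt.Point;
import java.awt.Rectangle;

public class ImageRegionSketch {

    private final Rectangle bounds;

    public ImageRegionSketch(Rectangle bounds) {
        // Defensive copy, since java.awt.Rectangle is mutable.
        this.bounds = new Rectangle(bounds);
    }

    /** The point a tap would land on: the center of the detected region. */
    public Point tapPoint() {
        return new Point(bounds.x + bounds.width / 2, bounds.y + bounds.height / 2);
    }

    /** There is no underlying UI control here, so there is nothing to type into. */
    public void sendKeys(String text) {
        throw new UnsupportedOperationException("a screen region only supports taps");
    }
}
```

For a region at (40, 150) that is 20 pixels wide and 20 pixels tall, `tapPoint()` gives (50, 160). That is enough to tap a button, but there is no control behind the region to receive text.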

Example

By way of example, I've written up a simple test case that attempts to tap on the "Recents" button in the Files app on iOS, using object detection. It's pretty slow (the object detection model takes about 30 seconds to run on my machine), so at this point it's really only useful in situations where absolutely nothing else works! I'm very grateful to Test.ai for contributing the object detection model, which is downloaded in v3 of the plugin.

import io.appium.java_client.MobileBy;
import io.appium.java_client.MobileElement;
import io.appium.java_client.Setting;
import io.appium.java_client.ios.IOSDriver;
import java.io.IOException;
import java.net.URL;
import java.util.HashMap;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;
import org.openqa.selenium.By;
import org.openqa.selenium.remote.DesiredCapabilities;
import org.openqa.selenium.support.ui.ExpectedConditions;
import org.openqa.selenium.support.ui.WebDriverWait;

public class Edition092_AI_Object_Detection {

    private String BUNDLE_ID = "com.apple.DocumentsApp";
    private IOSDriver<MobileElement> driver;
    private WebDriverWait wait;

    // "ai:clock" is routed to the classifier plugin via the "ai" custom find module
    private By recents = MobileBy.custom("ai:clock");
    private By browse = MobileBy.AccessibilityId("Browse");
    private By noRecents = MobileBy.AccessibilityId("No Recents");

    @Before
    public void setUp() throws IOException {
        DesiredCapabilities caps = new DesiredCapabilities();
        caps.setCapability("platformName", "iOS");
        caps.setCapability("automationName", "XCUITest");
        caps.setCapability("platformVersion", "11.4");
        caps.setCapability("deviceName", "iPhone 8");
        caps.setCapability("bundleId", BUNDLE_ID);

        // register the classifier plugin under the "ai" selector prefix
        HashMap<String, String> customFindModules = new HashMap<>();
        customFindModules.put("ai", "test-ai-classifier");
        caps.setCapability("customFindModules", customFindModules);

        // use object detection mode instead of the default element lookup mode
        caps.setCapability("testaiFindMode", "object_detection");
        caps.setCapability("testaiObjectDetectionThreshold", "0.9");
        caps.setCapability("shouldUseCompactResponses", false);

        driver = new IOSDriver<MobileElement>(new URL("http://localhost:4723/wd/hub"), caps);
        wait = new WebDriverWait(driver, 10);
    }

    @After
    public void tearDown() {
        try {
            driver.quit();
        } catch (Exception ign) {}
    }

    @Test
    public void testFindElementUsingAI() {
        driver.setSetting(Setting.CHECK_IMAGE_ELEMENT_STALENESS, false);

        // click the "Browse" button to navigate away from Recents
        wait.until(ExpectedConditions.presenceOfElementLocated(browse)).click();

        // now find and click the recents button by the fact that it looks like a clock
        driver.findElement(recents).click();

        // prove that the click was successful by locating the 'No Recents' text
        wait.until(ExpectedConditions.presenceOfElementLocated(noRecents));
    }
}

(Of course, you can also check out the sample code on GitHub)