Edition 86

Connecting Directly to Appium Hosts in Distributed Environments

This edition of Appium Pro is in many ways the sequel to the earlier article on how to batch Appium commands together using Execute Driver Script. In that article, we saw one way of getting around network latency, by combining many Appium commands into one network request to the Appium server.

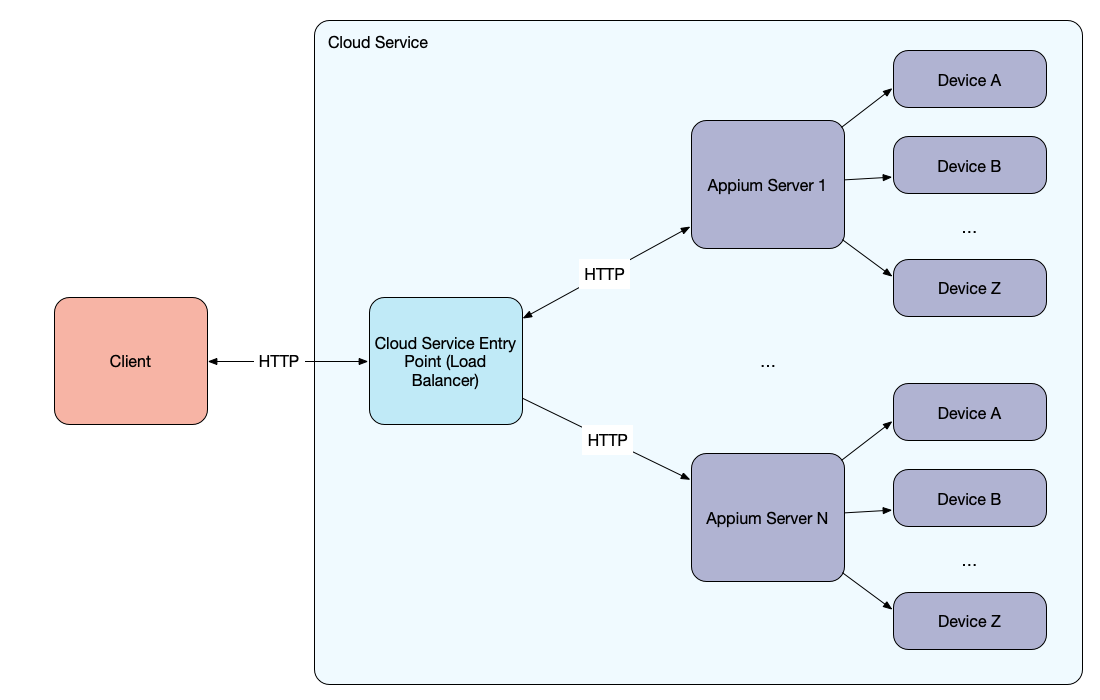

When using a cloud service, however, there might be other network-related issues to worry about. Many cloud services adopt the standard Webdriver/Appium client/server model for running Appium tests. But because they host hundreds or thousands of devices, they'll be running a very high number of Appium servers. To reduce complexity for their users, they often provide a single entry point for starting sessions. The users' requests all come to this single entry point, and they are proxied on to the appropriate Appium server based on the user's authentication details and the session ID. In these scenarios, the single entry point acts as a kind of Appium load balancer, as in the diagram below:

This model is great for making it easy for users to connect to the service. But it's not necessarily so great from a test performance perspective, because it puts an additional HTTP request/response in between your test client and the Appium server which is ultimately handling your client's commands. How big of a deal this is depends on the physical arrangement of the cloud service. Some clouds keep their load balancers and devices all together within one physical datacenter. In that case, the extra HTTP call is not expensive, because it's local to a datacenter. Other cloud providers emphasize geographical and network distribution, with real devices on real networks scattered all over the world. That latter scenario implies Appium servers also scattered around the world (since Appium servers must be running on hosts physically connected to devices). So, if you want both the convenience of a single Appium endpoint for your test script plus the benefit of a highly distributed device cloud, you'll be paying for it with a bunch of extra latency.

Well, the Appium team really doesn't like unnecessary latency, so we thought of a way to fix this little problem, in the form of what we call direct connect capabilities. Whenever an Appium server finishes starting up a session, it sends a response back to your Appium client, with a JSON object containing the capabilities the server provides (usually it's just a copy of whatever capabilities you sent in with your session request). If a cloud service implements direct connect, it will add four new capabilities to that list:

- directConnectProtocol

- directConnectHost

- directConnectPort

- directConnectPath

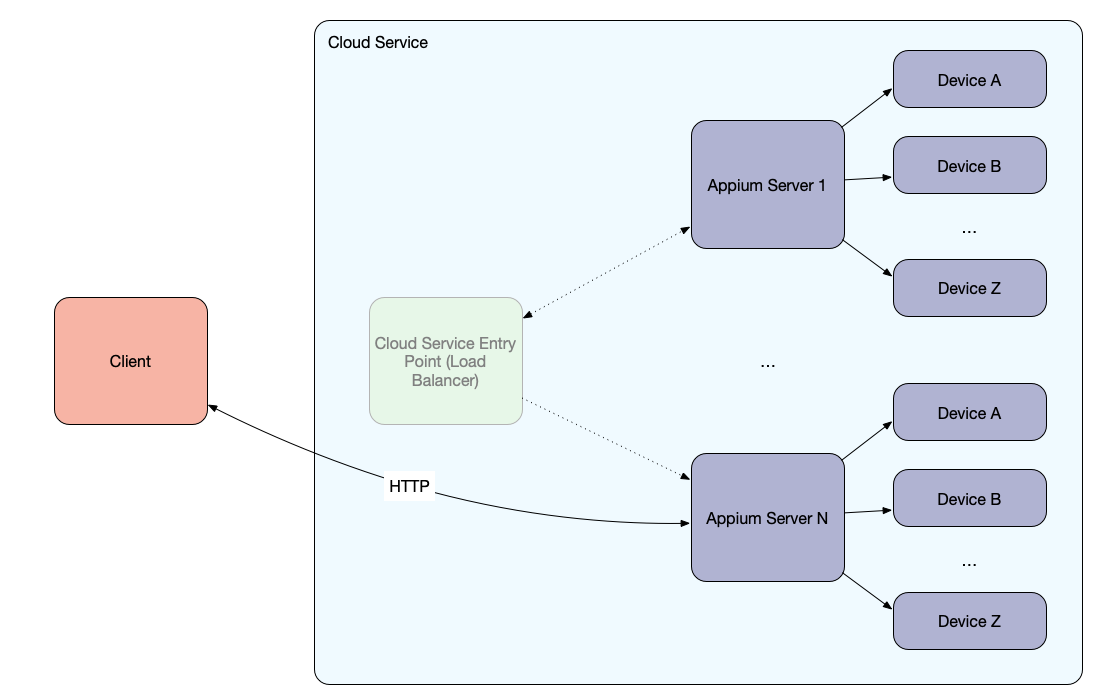

These capabilities encode the location and access information for the Appium server that is actually handling your test, rather than an intermediary. Your client originally connected to the Appium load balancer, so it knows nothing about the host and port of that final server. These capabilities give your client that information, and if your client also supports direct connect, it will parse them automatically and send each subsequent command not to the load balancer but directly to the Appium server handling your session. At the time of writing, the official Appium Ruby and Python libraries support direct connect, as does WebdriverIO, with support for other clients coming soon.
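To make the client-side step concrete, here is a minimal Python sketch of how a client might turn the four capabilities into a new base URL. It is not the code of any official Appium client. The function name `direct_connect_url`, the example host, port, and path, and the choice to also accept an `appium:`-prefixed form of each key are all assumptions made for illustration.

```python
from typing import Mapping, Optional

# The four capability names described above.
DIRECT_KEYS = (
    "directConnectProtocol",
    "directConnectHost",
    "directConnectPort",
    "directConnectPath",
)


def direct_connect_url(caps: Mapping[str, object]) -> Optional[str]:
    """Build the direct server URL, or return None if any capability is missing.

    Hypothetical helper for illustration only.
    """
    values = {}
    for key in DIRECT_KEYS:
        # Assumption: the server may report the key bare or with an "appium:" prefix.
        value = caps.get(key, caps.get(f"appium:{key}"))
        if value is None:
            # Without all four values, keep talking to the load balancer.
            return None
        values[key] = value

    protocol, host, port, path = (values[key] for key in DIRECT_KEYS)
    # Normalize the path so it always starts with exactly one slash.
    path = "/" + str(path).lstrip("/")
    return f"{protocol}://{host}:{port}{path}"


# Made-up session response capabilities (the host, port, and path are placeholders).
session_caps = {
    "platformName": "Android",
    "directConnectProtocol": "https",
    "directConnectHost": "device-host.example.com",
    "directConnectPort": 4723,
    "directConnectPath": "/wd/hub",
}

print(direct_connect_url(session_caps))
print(direct_connect_url({"platformName": "Android"}))
```

Returning `None` when any of the four values is absent reflects the passive nature of the feature: a client in this sketch only switches hosts when the server has supplied everything it needs, and otherwise keeps using the original entry point.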

It's essentially what's depicted in the diagram below, where for every command after the session initialization, HTTP requests are made directly to the final Appium server, not to the load balancer:

The most beautiful thing about this whole feature is that you don't even need to know about direct connect for it to work! It's a passive client feature that will work as long as the Appium cloud service you use has implemented it on their end as well. That said, because it's a new feature all around, you may have to turn on a flag in your client to signal that you want to use this feature if available. (For example, in WebdriverIO, you'll need to add the enableDirectConnect option to your WebdriverIO config file or object.) But beyond this, it's all automatic!

The only other thing you might need to worry about is your corporate firewall--if your security team has allowed connections explicitly to the load balancer through the firewall, but not to other hosts, then you may run into issues with commands being blocked by your firewall. In that case, either have your security team update the firewall rules, or turn off direct connect so your commands don't fail.

Direct Connect In Action

To figure out the actual, practical benefit of direct connect, I again engaged in some experimentation using HeadSpin's device cloud (HeadSpin helped with implementing direct connect, and their cloud currently supports it).

Here's what I found when, from my office in Vancouver, I ran a bunch of tests, with a bunch of commands, with and without direct connect, on devices sitting in California and Japan (in all cases, the load balancer was also located in California):

| Devices | Using Direct Connect? | Avg Test Time | Avg Command Time | Avg Speedup |
|---|---|---|---|---|
| California | No | 72.53s | 0.81s | |
| California | Yes | 71.62s | 0.80s | 1.2% |
| Japan | No | 102.03s | 1.13s | |
| Japan | Yes | 70.83s | 0.79s | 30.6% |

Analysis

What we see here is that, for tests I ran on devices in California, direct connect added only a marginal benefit. There was no downside, so it's still a nice little bump, but because Vancouver and California are pretty close, and because the load balancer was geographically quite close to the remote devices, we're not gaining very much.

Looking at the effects when the devices (and therefore Appium server) are located much further away, we see that direct connect provides a very significant speedup of about 30%. This is because, without direct connect, each command must travel from Vancouver to California and then on to Japan. With direct connect, we not only cut out the middleman in California, but we also avoid constructing another whole HTTP request along the way.

Test Methodology

(The way I ran these tests was essentially the same as the way I ran tests for the article on Execute Driver Script.)

- These tests were run on real Android devices hosted by HeadSpin around the world on real networks, in Mountain View, CA and Tokyo, Japan.

- For each test condition (location and use of direct connect), 15 distinct tests were run.

- Each test consisted of a login and logout flow repeated 5 times.

- The total number of Appium commands, not counting session start and quit, was 90 per test, meaning 1,350 overall for each test condition.

- The numbers in the table discard session start and quit time, counting only in-session test time (this means of course that if your tests consist primarily of session start time and contain very few commands, then you will get a proportionally small benefit from optimizing using this new feature).
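As a rough check on how the table's columns relate to each other, the short Python sketch below derives the average command time and the speedup from the average test times and the 90 commands per test. The helper names `per_command` and `speedup_percent` are made up for this illustration, and the speedup formula (time saved divided by the baseline time) is an assumption about how the percentages were computed. Because the inputs are the rounded values from the table, the results may differ from the published figures in the last digit.

```python
COMMANDS_PER_TEST = 90  # from the methodology: 90 commands per test

# Average in-session test times in seconds, copied from the results table.
avg_test_time = {
    ("California", False): 72.53,
    ("California", True): 71.62,
    ("Japan", False): 102.03,
    ("Japan", True): 70.83,
}


def per_command(total_seconds: float) -> float:
    """Average time per Appium command for one test."""
    return total_seconds / COMMANDS_PER_TEST


def speedup_percent(baseline: float, improved: float) -> float:
    """Time saved relative to the baseline, as a percentage (assumed formula)."""
    return (baseline - improved) / baseline * 100


for location in ("California", "Japan"):
    without = avg_test_time[(location, False)]
    with_dc = avg_test_time[(location, True)]
    print(
        f"{location}: {per_command(without):.2f}s -> {per_command(with_dc):.2f}s "
        f"per command, speedup {speedup_percent(without, with_dc):.1f}%"
    )
```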

Conclusion

You may not find yourself in a position where you need to use direct connect, but if you're a regular user of an Appium cloud provider, make sure to check in with them to ask whether they support the feature and whether your test situation might benefit from it. Because the feature needs to be implemented in the load balancer itself, it's not something that you can take advantage of by using open source Appium directly (although it would be great if someone built support for direct connect as a Selenium Grid plugin!). Still, as use of devices located around the world becomes more common, I'm happy that we have at least a partial solution for eliminating unnecessary latency.