Edition 70

Capturing Audio Output During Testing: Part 2

Previously, we looked at how to capture audio playback during testing. Now, it's time to look at how to verify that the audio matches our expectations! Those expectations themselves need to be expressed in audio form. In other words, what we want to do is take the audio we've captured from a particular test run, and assert that it is in some way similar to another audio file that we already have available. We can call this latter audio file the "baseline" or "gold standard", against which we will be running our tests.

Context

In the first part, the audio we care about verifying is a sound snippet from one of my band's old songs. So what we need to do is save a snippet we know to be "good", so that future test runs can be compared against it. I've gone ahead and copied such a snippet into the resources directory of the Appium Pro project. When we left our test at the end of the previous part, we had captured audio from an Android emulator and asserted that the audio had been saved, but had not done anything with it. Here's where we're starting out from now, with our new test class that inherits from the old test class:

public class Edition070_Audio_Verification extends Edition069_Audio_Capture {

private File getReferenceAudio() throws URISyntaxException {

URL refImgUrl = getClass().getClassLoader().getResource("Edition070_Reference.wav");

return Paths.get(refImgUrl.toURI()).toFile();

}

@Test

@Override

public void testAudioCapture() throws Exception {

WebDriverWait wait = new WebDriverWait(driver, 10);

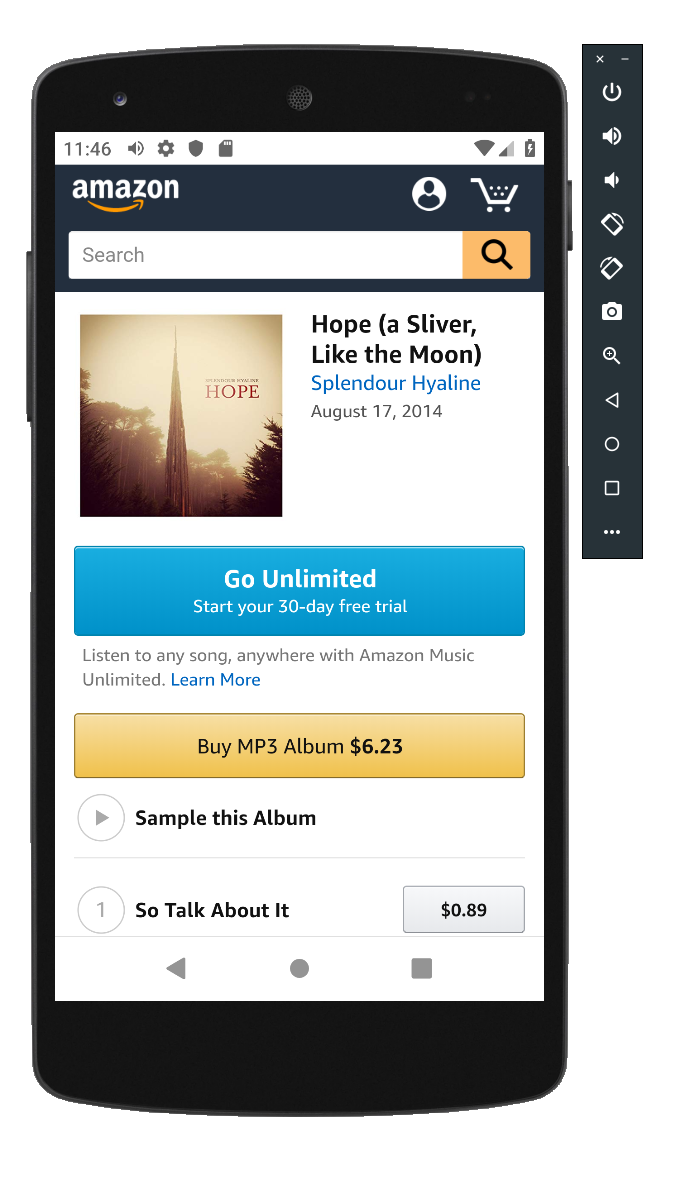

// navigate to band homepage

driver.get("http://www.splendourhyaline.com");

// click the amazon store icon for the first album

WebElement store = wait.until(ExpectedConditions.presenceOfElementLocated(

By.cssSelector("img[src='/img/store-amazon.png']")

));

driver.executeScript("window.scrollBy(0, 100);");

store.click();

// start playing a sample of the first track

WebElement play = wait.until(ExpectedConditions.presenceOfElementLocated(

By.xpath("//div[@data-track-number='2']//div[@data-action='dm-playable']")

));

driver.executeScript("window.scrollBy(0, 150);");

// start the song sample

play.click();

// start an ffmpeg audio capture of system audio. Replace with a path and device id

// appropriate for your system (list devices with `ffmpeg -f avfoundation -list_devices true -i ""`

File audioCapture = new File("/Users/jlipps/Desktop/capture.wav");

captureForDuration(audioCapture, 10000);

}

}

What we now need to do is assert that our audioCapture File in some sense matches our gold standard. But how on earth would we do that?

Audio file similarity

As a naive approach, we could assume that similar WAV files might be similar on a byte level. We could try to use something like an MD5 hash of the file and compare it with our gold standard. This, however, will not work. Unless the WAV files are exactly the same, the two MD5 hashes will be completely unrelated. We could get slightly more complicated, and actually read the WAV file as a stream of bytes, and compare each byte of our captured WAV with the baseline WAV. This approach, unfortunately, is also doomed to fail! Tiny differences in sound would lead to huge differences on a byte level. Also, if the two WAV files are offset in time by as little as one sample (and there are many thousands of samples per second), the bytes no longer line up and our comparison will be utter garbage.
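To make the MD5 point concrete, here is a minimal sketch using only the Java standard library. The class name Md5Sensitivity and the made-up "waveform" are purely illustrative stand-ins, not real audio and not part of the Appium Pro project. It hashes two byte arrays that differ in a single byte:

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

class Md5Sensitivity {

    // Hex-encoded MD5 digest of a byte array
    static String md5Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(data);
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    public static void main(String[] args) {
        // Assumption: a synthetic sine-like signal standing in for audio samples
        byte[] original = new byte[1000];
        for (int i = 0; i < original.length; i++) {
            original[i] = (byte) (100 * Math.sin(i / 10.0));
        }

        // Nudge exactly one "sample" by the smallest possible amount
        byte[] tweaked = original.clone();
        tweaked[500] += 1;

        System.out.println("original: " + md5Hex(original));
        System.out.println("tweaked:  " + md5Hex(tweaked));
    }
}
```

The two printed hashes share no useful relationship, even though 999 of the 1000 bytes are identical, so a hash comparison can only ever tell us "identical" or "not identical".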

What we will do instead is take advantage of work that has been done in the world of audio fingerprinting. Fingerprinting is what lies behind services like Shazam or last.fm that can detect what song is being played even though it might be recorded through your phone's microphone. A fingerprinting algorithm takes into account various acoustic properties of segments of the audio, and produces what is essentially a hash of a piece of audio. The important thing is that, unlike with MD5, similar audio files will produce similar hashes, so they can actually be fruitfully compared with one another.

Chromaprint

The fingerprinting library we will use is called Chromaprint, and you will need to download the appropriate version for your system. Just like with ffmpeg, we will run the Chromaprint binary as a Java subprocess. The way we'd run it outside of Java, on the command line, would be like this:

# in the chromaprint directory
./fpcalc -raw /path/to/audio.wav

This will produce output that corresponds to the fingerprint of the audio file. Using the -raw flag means we get the raw numeric output rather than the base64-encoded output (which is nice and small, but makes the comparisons between fingerprints less strong). Running from the terminal, the output will look something like:

DURATION=9

FINGERPRINT=1663902633,1696875689,1709563129,1688656920,1688648712,1688644617,1688708873,1692705291,1684312587,1814528570,1832305258,1863766762,3993379306,3993380074,3997640938,3998550234,4275505354,4271783130,4137558234,1988825290,1451753930,1586000842,1586029546,3599411178,3603677163,3595079099,3590884507,1444650121,1448785096,1985647688,1985647640,1985581096,1983422504,1983438904,2000576584,1949176009,1949172170,1950220986,1954481834,1958288106,1958292202,1958247034,1957227082,1957211979,2011735113,1982377992,2116780040,2117763128,1041928232,1041948712,907960360,907878456,1728520216,1812338040,1812336376,1818103480

But we want to run this from Java, so we need a handy class that encapsulates all this fingerprinting business, including running Chromaprint's fpcalc binary. It will also be responsible for parsing the response and storing it in a way that makes comparison easy:

class AudioFingerprint {

    // path to the fpcalc binary; replace with the location on your system
    private static final String FPCALC = "/Users/jlipps/Desktop/chromaprint/fpcalc";

    private String fingerprint;

    AudioFingerprint(String fingerprint) {
        this.fingerprint = fingerprint;
    }

    public String getFingerprint() { return fingerprint; }

    public double compare(AudioFingerprint other) {
        return FuzzySearch.partialRatio(this.getFingerprint(), other.getFingerprint());
    }

    public static AudioFingerprint calcFP(File wavFile) throws Exception {
        String output = new ProcessExecutor()
            .command(FPCALC, "-raw", wavFile.getAbsolutePath())
            .readOutput(true).execute()
            .outputUTF8();

        Pattern fpPattern = Pattern.compile("^FINGERPRINT=(.+)$", Pattern.MULTILINE);
        Matcher fpMatcher = fpPattern.matcher(output);

        String fingerprint = null;
        if (fpMatcher.find()) {
            fingerprint = fpMatcher.group(1);
        }

        if (fingerprint == null) {
            throw new Exception("Could not get fingerprint via Chromaprint fpcalc");
        }

        return new AudioFingerprint(fingerprint);
    }
}

Basically, what's going on here is that we are setting a path to the fpcalc binary, and then using the ProcessExecutor Java library (from the good folks at ZeroTurnaround) to make executing fpcalc very easy. We then use regular expression matching on the output to extract the fingerprint of the audio file. Most of the code here is simply Java class boilerplate and regular expression logic!
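If you want to try the parsing step on its own, without having fpcalc installed, here is a small sketch that runs the same regular expression against a hard-coded string. The class name FpcalcOutputParser and the toValues helper are hypothetical additions for illustration, and the sample string is a shortened version of the output shown earlier. Note that some raw values are larger than Integer.MAX_VALUE, so the sketch parses them as long:

```java
import java.util.Arrays;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class FpcalcOutputParser {

    // Same pattern as in AudioFingerprint: capture everything after "FINGERPRINT="
    private static final Pattern FP_PATTERN =
        Pattern.compile("^FINGERPRINT=(.+)$", Pattern.MULTILINE);

    // Pull the comma-separated fingerprint string out of fpcalc's output
    static String extractFingerprint(String fpcalcOutput) {
        Matcher matcher = FP_PATTERN.matcher(fpcalcOutput);
        if (!matcher.find()) {
            throw new IllegalArgumentException("No FINGERPRINT line found");
        }
        return matcher.group(1).trim();
    }

    // Hypothetical helper: turn the fingerprint string into numeric values
    static long[] toValues(String fingerprint) {
        return Arrays.stream(fingerprint.split(","))
            .map(String::trim)
            .mapToLong(Long::parseLong)
            .toArray();
    }

    public static void main(String[] args) {
        // Shortened sample of `fpcalc -raw` output
        String output = "DURATION=9\nFINGERPRINT=1663902633,1696875689,3993379306\n";
        String fingerprint = extractFingerprint(output);
        System.out.println(fingerprint);
        System.out.println(toValues(fingerprint).length + " values");
    }
}
```

The AudioFingerprint class above only needs the string form, since the comparison it performs is string-based; the numeric form is just handy if you want to inspect the values.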

Comparing fingerprints

The most important bit is the compare method, where we are making use of something called the Levenshtein distance between strings to figure out how similar two audio fingerprints really are. To this end I'm using a library called JavaWuzzy (a port of the useful Python library FuzzyWuzzy), which contains the important algorithms so I don't need to worry about implementing them. The response of my call to the partialRatio method is a number between 0 and 100, where 100 is a perfect match and 0 signifies no matching segments at all.
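To get a feel for what a Levenshtein-based score means, here is a simplified sketch in plain Java. It is not how JavaWuzzy implements partialRatio (which, as I understand it, looks for the best-matching substring alignment); it is just a plain edit distance normalized to the same 0 to 100 range. The class and method names are made up for this illustration:

```java
class LevenshteinSketch {

    // Classic dynamic-programming edit distance: the minimum number of
    // single-character insertions, deletions, and substitutions needed
    // to turn string a into string b
    static int distance(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(
                    Math.min(curr[j - 1] + 1, prev[j] + 1),
                    prev[j - 1] + cost);
            }
            int[] tmp = prev;
            prev = curr;
            curr = tmp;
        }
        return prev[b.length()];
    }

    // Normalize the distance to a 0-100 score, where 100 means identical
    static double similarity(String a, String b) {
        int maxLen = Math.max(a.length(), b.length());
        if (maxLen == 0) {
            return 100.0;
        }
        return 100.0 * (maxLen - distance(a, b)) / maxLen;
    }

    public static void main(String[] args) {
        System.out.println(distance("kitten", "sitting"));
        System.out.println(similarity("1663902633,1696875689", "1663902633,1696875689"));
        System.out.println(similarity("1663902633,1696875689", "1663902633,1696875690"));
    }
}
```

The takeaway is that the score degrades gradually as the strings drift apart, which is exactly the property that a byte-level or MD5 comparison lacks.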

All we need to do, then, is hook this class up into our test so that we can fingerprint both our newly-captured audio and the baseline audio, and then run the comparison. In my experiments, I was able to achieve a value of about 75 for a correct comparison, whereas other song snippets came in at an appropriately lower value, say 45. Of course you'll want to determine through experimentation what your similarity threshold should be, based on the particular audio domain, clip length, etc.
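One simple way to turn that experimentation into a number is sketched below. This is only an illustrative heuristic, not something the test in this article does: collect scores from runs you know should match and runs you know should not, and pick the midpoint between the lowest matching score and the highest non-matching score. The class name, method name, and the sample scores in main (other than the 75 and 45 mentioned above) are made up:

```java
import java.util.Arrays;

class ThresholdSketch {

    // Midpoint between the worst "should match" score and the best
    // "should not match" score; fails if the two groups overlap
    static double midpointThreshold(double[] matchingScores, double[] nonMatchingScores) {
        double lowestMatch = Arrays.stream(matchingScores).min()
            .orElseThrow(() -> new IllegalArgumentException("No matching scores given"));
        double highestNonMatch = Arrays.stream(nonMatchingScores).max()
            .orElseThrow(() -> new IllegalArgumentException("No non-matching scores given"));
        if (highestNonMatch >= lowestMatch) {
            throw new IllegalStateException(
                "Score ranges overlap; no single threshold separates them");
        }
        return (lowestMatch + highestNonMatch) / 2;
    }

    public static void main(String[] args) {
        // Hypothetical scores gathered from repeated experimental runs
        double[] matching = {75.0, 78.0};
        double[] nonMatching = {45.0, 50.0};
        System.out.println(midpointThreshold(matching, nonMatching));
    }
}
```

If the two groups of scores overlap, that is a sign the clips are too short or too similar for this kind of check to be reliable, and no threshold will save the test.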

Hooking in the new code is relatively easy (starting from the point in the test method where we have the audioCapture file populated with the new audio):

// now we calculate the fingerprint of the freshly-captured audio...
AudioFingerprint fp1 = AudioFingerprint.calcFP(audioCapture);

// as well as the fingerprint of our baseline audio...
AudioFingerprint fp2 = AudioFingerprint.calcFP(getReferenceAudio());

// and compare the two
double comparison = fp1.compare(fp2);

// finally, we assert that the comparison is sufficiently strong
Assert.assertThat(comparison, Matchers.greaterThanOrEqualTo(70.0));

Here, I've added a helper method called getReferenceAudio() to get me the baseline audio File object from the resources directory. And notice the assertion in the final line, which turns this bit of automation into a bona fide test of audio similarity!

So, when all is said and done, it is possible to test audio with Appium and Java (and since we are using ffmpeg and Chromaprint as subprocesses, the same technique can be used in any other programming language as well). This is relatively unexplored territory, though, so I would expect there to be a certain amount of potential flakiness for this kind of testing. That being said, the Chromaprint fingerprinting algorithm is used commercially and appears to be quite good, so at the end of the day the quality of the test will depend on the quality, length, and genre of your audio. Please do let me know if you put this into practice as I'd love to hear any case studies of this technique. And don't forget to check out the full code sample on GitHub, to see everything in context. Happy testing, and happy listening! Oh, and in case you really wanted to know: yes, my band will be coming out with a new studio album very soon!