Edition 105

Paid Tools And Services For Mobile App Performance Testing

So far in this series, we've looked at mobile performance testing in general, as well as free tools for mobile performance testing. Now it's time to discuss paid tools. Obviously, Appium is free and open source, and it's nice to focus on and promote free tools. But at the end of the day, paid tools and services can be valuable, especially in a business setting where the budget is less important than the end result. I want to make sure you have some decent and reliable information about the whole ecosystem, which includes paid tools, so from time to time I will let you know my thoughts on these as well!

Disclaimers: This is not intended to be completely comprehensive, and even for the services I cover, I will not attempt to give a full overview of all their features. The point here is to drill down specifically into performance testing options. Some services might not be very complete when it comes to their performance testing offering, but that doesn't mean they don't have their strong points in other areas. For each tool, I'll also note whether I've personally used it (and am therefore speaking from experience) or whether I'm merely using their publicly-available marketing information or docs as research. Finally, I'll note for transparency that HeadSpin is an Appium Pro sponsor, and in the past I worked for Sauce Labs.

Appium Cloud Services with Performance Features

Most interesting for us as Appium users will be services that allow the collection of performance data at the same time as you run your Appium tests. This is in my opinion the 'holy grail' of performance testing--when you don't have to do anything in particular to get your performance test data! In my research I found four companies that in one way or another touch on some of the performance testing topics we've discussed as part of their Appium-related products.

HeadSpin

I've used this service

HeadSpin is the most performance-focused of all the service providers I've seen. Indeed, they began life with performance testing as their sole mission, so this is not surprising. Along the way, they added support for Appium and have been heavy contributors to Appium itself in recent years. HeadSpin supports real iOS and Android devices in various geographical locations around the world, connected to a variety of networks.

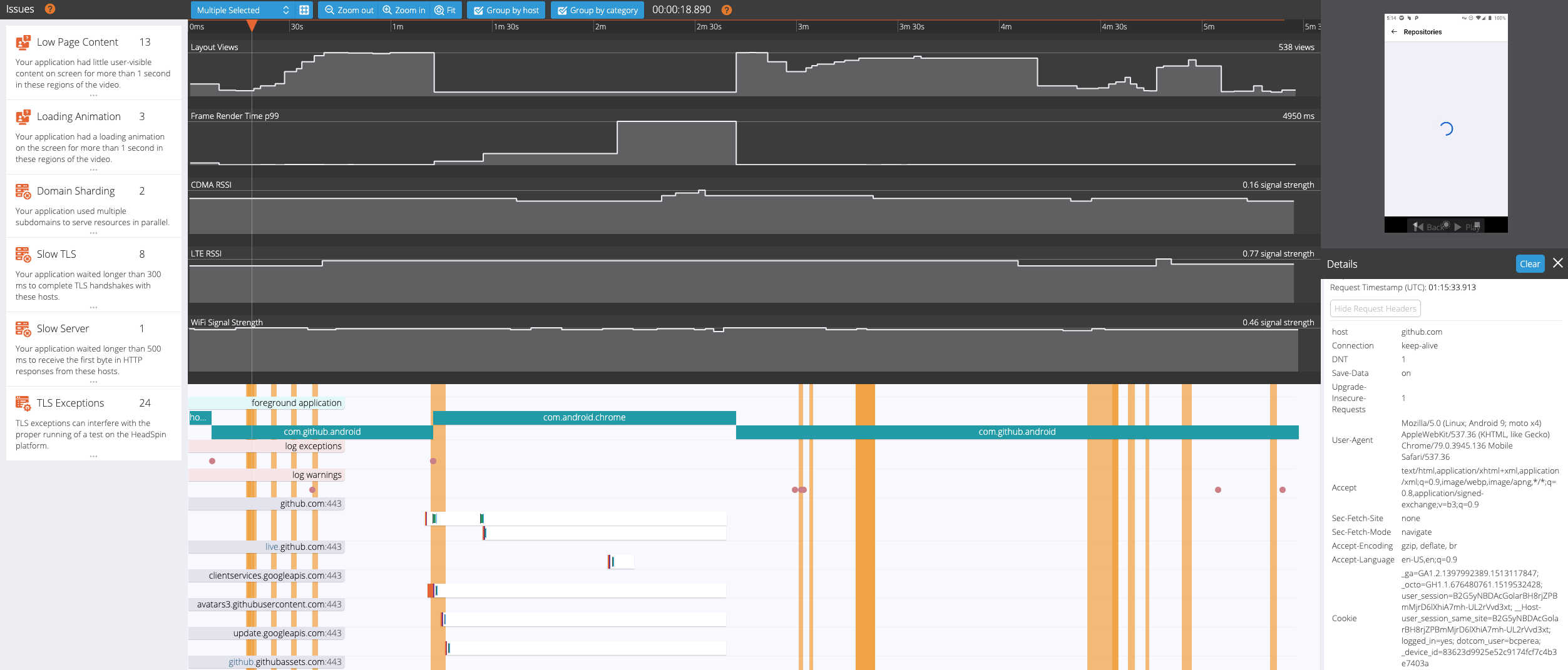

When you run any Appium test on HeadSpin, you get access to a report that looks something like the one here. Basically, HeadSpin tracks a ton of different metrics in what they call a performance session report. The linked doc highlights some of the metrics captured:

- Network traffic (in HAR and PCAP format)

- Device metrics including CPU, memory, battery, signal strength, screen rotation, frame rate, and others

- Device logs

- Client-side method profiling

- Contextual information such as Appium commands issued during the test, what app was in the foreground, and other contextual metadata

On top of this, they try to show warnings or make suggestions based on industry-wide targets for various metrics. And this is all without you having to instrument your app or issue specific commands to retrieve specific metrics. HeadSpin covers the most metrics out of any product or company I'm familiar with, including really difficult-to-measure quantities like the MOS (mean opinion score) of a video as a proxy for video quality.
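Since HAR is a standard JSON-based format, network captures like the ones mentioned above are easy to post-process yourself. Here is a minimal sketch, assuming you have downloaded a HAR file from a session. The function names and the sample data are made up for illustration; only the `log.entries`, `request.url`, and `time` fields come from the HAR format itself.

```python
import json
from typing import Any


def load_har(path: str) -> dict[str, Any]:
    """Read a HAR capture from disk (HAR files are plain JSON)."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def slowest_requests(har: dict[str, Any], limit: int = 3) -> list[tuple[str, float]]:
    """Return (url, total_ms) pairs for the slowest entries in a HAR capture."""
    entries = har["log"]["entries"]
    # In HAR, each entry's "time" is the total elapsed time of the request in ms.
    timed = [(entry["request"]["url"], float(entry["time"])) for entry in entries]
    return sorted(timed, key=lambda pair: pair[1], reverse=True)[:limit]


# Tiny hand-written stand-in for a real capture (hypothetical URLs and timings).
SAMPLE_HAR = {
    "log": {
        "entries": [
            {"request": {"url": "https://example.com/api/login"}, "time": 120.0},
            {"request": {"url": "https://example.com/api/feed"}, "time": 840.0},
            {"request": {"url": "https://example.com/img/logo.png"}, "time": 310.5},
        ]
    }
}

if __name__ == "__main__":
    for url, total_ms in slowest_requests(SAMPLE_HAR, limit=2):
        print(f"{total_ms:8.1f} ms  {url}")
```

With a real capture you would call `slowest_requests(load_har("session.har"))` instead of using the sample data, where `session.har` is whatever file name you saved the capture under.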

One other feature useful for performance testing is what they call the 'HeadSpin bridge', which allows bridging remote devices to your local machine via their hs CLI tool. Concretely, this gives you the ability to run adb on the remote device as if it were local, so any Android-related performance testing tools that work on a local device will also work on their cloud devices, for example the CPU Profiler. The bridge works similarly for iOS, allowing step debugging or Instruments profiling on remote devices, as if they were local.
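To make the "adb as if it were local" idea concrete, here is a rough sketch of a script that reads an app's memory usage through adb. It assumes the remote device is already bridged and visible to your local adb. The function names are hypothetical, and the parsing assumes the usual `TOTAL` line in `dumpsys meminfo` output, whose exact layout can differ between Android versions.

```python
import re
import subprocess
from typing import Optional


def parse_total_pss_kb(meminfo_output: str) -> Optional[int]:
    """Pull the total PSS (in KB) out of `dumpsys meminfo <package>` output.

    Assumes a line starting with "TOTAL" (or "TOTAL PSS:") followed by the
    value; returns None if no such line is found.
    """
    for line in meminfo_output.splitlines():
        match = re.match(r"\s*TOTAL(?:\s+PSS:)?\s+(\d+)", line)
        if match:
            return int(match.group(1))
    return None


def read_total_pss_kb(package: str, serial: Optional[str] = None) -> Optional[int]:
    """Run `adb shell dumpsys meminfo <package>` and return total PSS in KB."""
    cmd = ["adb"]
    if serial:
        # Target one specific device when several are attached or bridged.
        cmd += ["-s", serial]
    cmd += ["shell", "dumpsys", "meminfo", package]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_total_pss_kb(result.stdout)
```

Calling something like `read_total_pss_kb("com.example.app")` at a few points during an Appium test would then give a crude memory profile, whether the device is on your desk or bridged from a cloud.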

In terms of network conditioning, HeadSpin offers a number of options that can be set on a per-test basis, including network shaping, DNS spoofing, and header injection (which is super useful for tracing network calls all the way through backend infrastructure). This is in addition to requesting devices that exist in certain geographical regions.

Finally, it's worth pointing out that HeadSpin tries to make it easy for teams to use the performance data captured on their service by spinning up a custom "Replica DB" for each team, where performance data is automatically written. This "open data" approach means it's easy to build custom dashboards and the like on top of the data without having to pre-process it or host it yourself.

Sauce Labs

I've used this service

Historically, Sauce Labs was focused primarily on cross-browser web testing, and so it's no surprise they have some performance-related information available about their web browser sessions. That's not what we're considering today, however, but rather Sauce's mobile offering. They have a virtual device cloud of iOS simulators and Android emulators, but as we mentioned before, performance testing of virtual devices is not particularly meaningful.

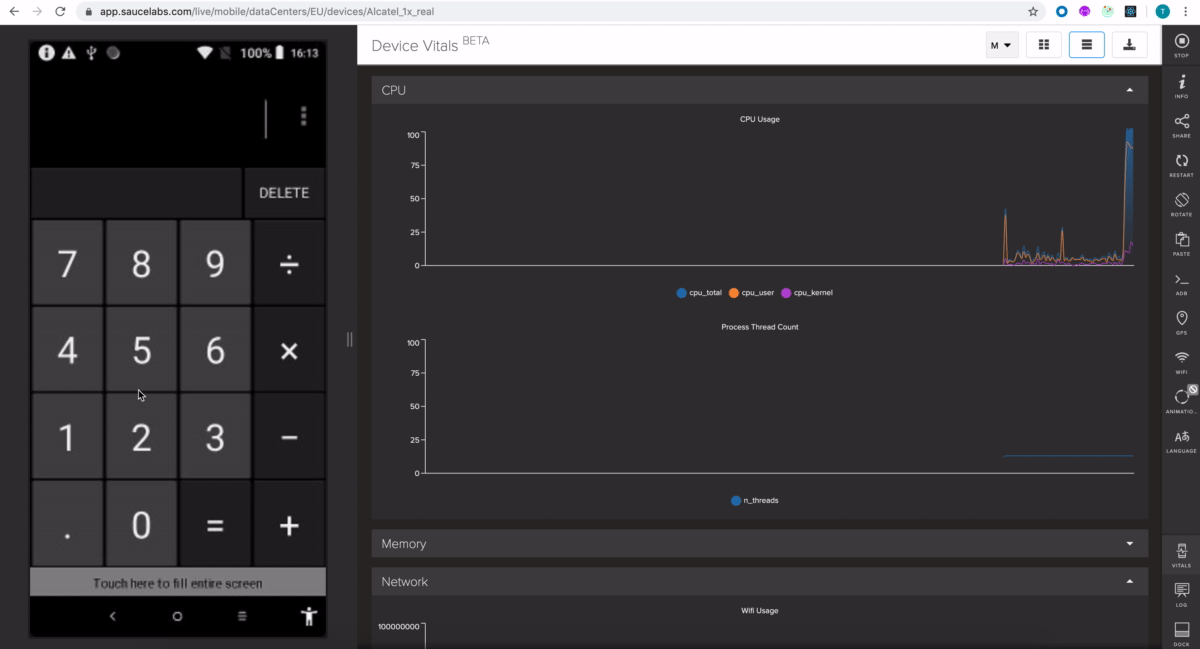

Sauce's real device cloud supports a feature called Device Vitals which provides a broad set of performance metrics, including CPU, memory, and performance information. It works with live/manual testing, but is also supported via their Appium interface when a special capability recordDeviceVitals is set. At this stage of the product it appears the only output for Appium tests is a CSV file which can be downloaded, meaning the user would be responsible for extracting and displaying the performance data itself. However, the live/manual usage shows a graph of performance data in real time:
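If you do end up with a CSV of vitals from an Appium run, a few lines of scripting go a long way. The sketch below shows the recordDeviceVitals capability in a capabilities dictionary and a small helper that summarizes every numeric column in a CSV. The column names in the sample are invented for illustration, since I haven't confirmed the actual layout of the downloaded file.

```python
import csv
import io
from statistics import mean

# recordDeviceVitals is the capability named above; platformName is just a
# placeholder for the rest of your usual capabilities.
capabilities = {
    "platformName": "Android",
    "recordDeviceVitals": True,
}


def summarize_vitals(csv_text: str) -> dict[str, dict[str, float]]:
    """Compute min/mean/max for every numeric column in a vitals CSV export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns: dict[str, list[float]] = {}
    for row in reader:
        for name, value in row.items():
            try:
                number = float(value)
            except (TypeError, ValueError):
                continue  # skip empty or non-numeric cells
            columns.setdefault(name, []).append(number)
    return {
        name: {"min": min(values), "mean": mean(values), "max": max(values)}
        for name, values in columns.items()
    }


# Hypothetical sample data; real column names will likely differ.
SAMPLE_CSV = (
    "timestamp,cpu_percent,memory_mb\n"
    "0,12.5,180\n"
    "1,40.0,200\n"
    "2,22.5,190\n"
)

if __name__ == "__main__":
    for column, stats in summarize_vitals(SAMPLE_CSV).items():
        print(column, stats)
```

From here it's a short step to failing a build when, say, peak memory crosses a threshold you care about.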

Sauce also supports a virtual device bridge, which they call Virtual USB. It is generally available for Android and in beta for iOS, and works more or less the same as HeadSpin's bridge feature.

In my experience, and as far as I can tell, Sauce does not give users the ability to specify network conditions for device connections, so testing app performance in a variety of lab conditions is not possible. However, it is possible to direct Sauce devices to use a proxy connection, including something like Charles Proxy (see below).

AWS Device Farm

I've used this service

AWS has its own Appium cloud, which works on a bit of a different model than HeadSpin or Sauce. With AWS, you compile your whole test suite into a bundle and send it off to Device Farm, which then unpacks it and uses it to run tests locally. Theoretically, this gives you a lot of flexibility about how you can connect to a device during the test, and it's probably possible (though I haven't tested this) to run some of your own performance testing diagnostics on the device under test, even as your Appium script is going through its paces.

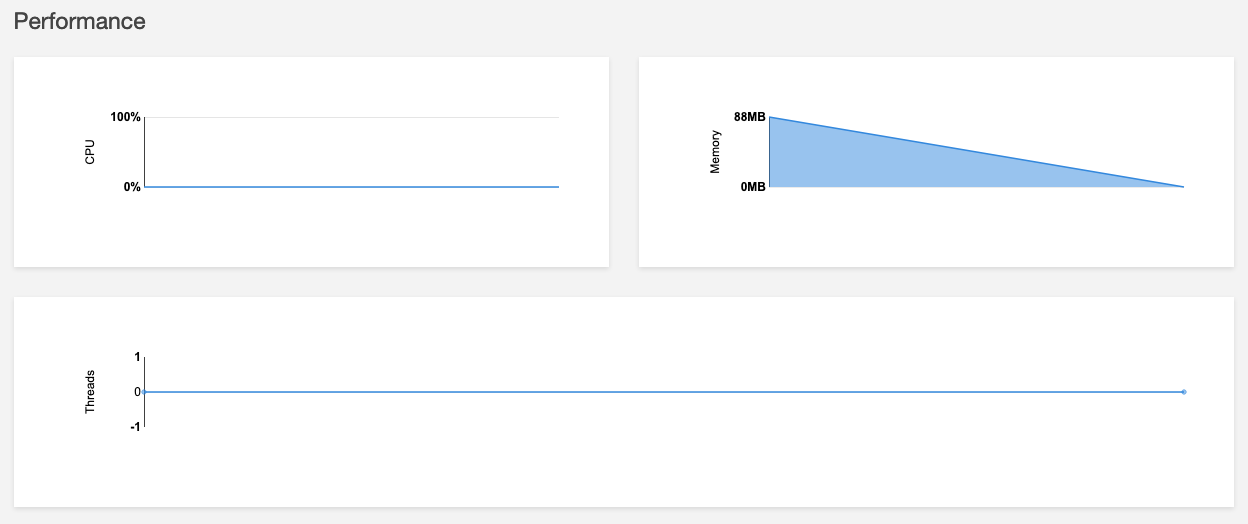

Luckily, you may not need to worry about this because Device Farm does capture some performance information as your test is executing, namely CPU, memory usage, and thread count over time:

Device Farm does allow you to simulate specific network conditions by creating a 'network profile' (which acts basically the same as the profiles we discussed in the article on simulating network conditions for virtual devices).
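Network profiles can also be created programmatically rather than through the console. As a hedged sketch, the helper below builds the parameters for Device Farm's CreateNetworkProfile operation using the same bandwidth, delay, and loss values in both directions. The helper name, the "slow-3g" values, and the project ARN are all hypothetical, and I haven't run this against a live account.

```python
from typing import Any


def network_profile_params(
    project_arn: str,
    name: str,
    *,
    bandwidth_kbps: int,
    delay_ms: int,
    loss_percent: int,
) -> dict[str, Any]:
    """Build CreateNetworkProfile parameters for a symmetric connection."""
    bits_per_second = bandwidth_kbps * 1000
    return {
        "projectArn": project_arn,
        "name": name,
        "type": "PRIVATE",  # a profile owned by your own project
        "uplinkBandwidthBits": bits_per_second,
        "downlinkBandwidthBits": bits_per_second,
        "uplinkDelayMs": delay_ms,
        "downlinkDelayMs": delay_ms,
        "uplinkLossPercent": loss_percent,
        "downlinkLossPercent": loss_percent,
    }


# Placeholder ARN and made-up "slow 3G" numbers, purely for illustration.
params = network_profile_params(
    "arn:aws:devicefarm:us-west-2:123456789012:project:EXAMPLE",
    "slow-3g",
    bandwidth_kbps=400,
    delay_ms=300,
    loss_percent=2,
)

if __name__ == "__main__":
    print(params)
    # With boto3 installed and AWS credentials configured, the same dict
    # could be passed along roughly like this:
    #   import boto3
    #   boto3.client("devicefarm", region_name="us-west-2").create_network_profile(**params)
```

Keeping the parameter-building separate from the API call makes it easy to version your network profiles alongside your test code.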

Experitest

Information retrieved from company website

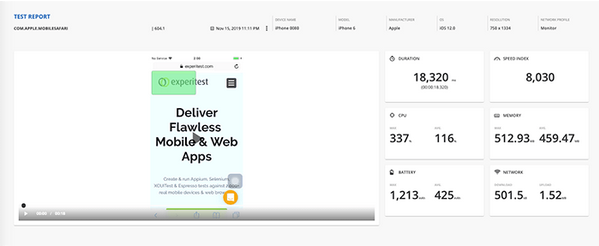

Experitest is a mobile testing company with Appium support, which also advertises performance data collection. They say they support different network conditions and geographical locations for their devices, as well as performance metrics like:

- Transaction duration

- Speed Index

- CPU consumption

- Memory Consumption

- Battery Consumption

- Network data (upload and download)

I am not entirely sure what a 'transaction' is, but it appears to be a user-definable span of time or sequence of app events.

I could not find more specific information in the docs about how this data is captured, and whether it is done automatically as part of an Appium test or whether it is a separate feature/interface. As best I can figure out, users define start/stop points for performance 'transaction' recording during test recording, though this does not explain whether it is possible with Appium tests that you have brought yourself and did not develop in Experitest's 'Appium Studio' product.
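To illustrate what a user-defined 'transaction' could look like in a test you've written yourself, here is a generic sketch of timing a named span of test steps. This is not Experitest's API; the `transaction` helper and the "login" name are made up, and the sleep simply stands in for whatever Appium commands make up the step being measured.

```python
import time
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def transaction(name: str, results: dict[str, float]) -> Iterator[None]:
    """Record the wall-clock duration (in seconds) of the enclosed block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Store the duration even if the enclosed steps raise an exception.
        results[name] = time.perf_counter() - start


timings: dict[str, float] = {}

with transaction("login", timings):
    # Stand-in for real steps, e.g. tapping a button and waiting for a screen.
    time.sleep(0.01)

if __name__ == "__main__":
    for name, seconds in timings.items():
        print(f"{name}: {seconds * 1000:.1f} ms")
```

Durations measured this way include Appium's own command overhead, so they are best used for spotting trends between runs rather than as absolute numbers.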

Like AWS Device Farm, Experitest apparently has the ability to manually create network 'profiles' that can be applied to certain tests or projects.

Perfecto Mobile

Information retrieved from company website

Perfecto Mobile is probably the oldest company in the entire automated mobile testing space, and they've had a lot of time to build out their support for performance testing. It looks like Perfecto focuses on network condition simulation via their concept of Personas, which are essentially the same types of network profiles we have seen in other places.

You can set the Persona you want your Appium test to use via a special desired capability. From my perusal of the docs, it also appears possible to set "points of interest" in your test, which you can use for verification later on. It wasn't clear, but setting one of these "points of interest" might capture some specific performance data at that time for later review. However, I couldn't find any specific mention of the types of performance metrics that Perfecto captures, if any.

In addition, I'm not quite sure what "Appium" means exactly for Perfecto. From their Appium landing page, we see this message:

Appium testing has limitations that may prevent it from being truly enterprise ready. Perfecto has re-implemented the Appium server so it can deliver stronger automated testing controls, mimic real user environments, and support the unique needs of today’s Global 1000 companies.

What this implies is that, if you're using Perfecto, you're not actually running the Appium open source project code. Whether that is a good or bad thing is up to you to decide. However, I can only imagine that it could generate confusion as their internal reimplementation inevitably drifts from what the Appium community is doing! How easy is it to migrate Appium tests running on Perfecto to another cloud? If they're not using standard open source Appium servers, this could be a difficult question to answer. I'm a big fan of what Perfecto is trying to do with their product, but (as is probably not surprising given my role with the Appium project) I don't think it's beneficial for any cloud provider to "reimplement" Appium.

Other Products

Cloud services are not the only types of paid products used for mobile performance testing. We also have SDKs (which are built into the app under test), and standalone software used for various purposes. None of these have any special relationship with or support for Appium, so you're responsible for figuring out how to make them work in conjunction with your Appium test.

Charles Proxy

Information retrieved from company website

Charles Proxy is a network debugging proxy that has special support for mobile devices. You can use it to inspect or adjust network traffic coming into or out of your devices. Charles comes with special VPN profiles you can install on your devices to get around issues such as trusting Charles's SSL certificates.

Charles's main features are: SSL proxying, bandwidth throttling (network conditioning), request replay, and request validation.

Firebase

Information retrieved from company website

Google's Firebase is a lot of things, apparently intended to be a sort of one-stop-shop especially for Android developers. It's got databases and auth services, analytics and distribution tools. Firebase doesn't have any officially supported Appium product, though they do support automated functional testing of Android apps (via Espresso and UiAutomator 2) and iOS apps (via XCTest) through their Test Lab product.

Of more interest for us today is their performance monitoring product. The way this works is by bundling in a particular SDK with your app which enables in-depth performance tracing viewable in the Firebase console. You start and stop traces using code that leverages the SDK in test versions of your app, and that's it. Of course, this means modifying your app under test, and I couldn't find any discussion of whether performance or network data is captured "from the outside" during test runs in the Firebase Test Lab.

New Relic

Information retrieved from company website

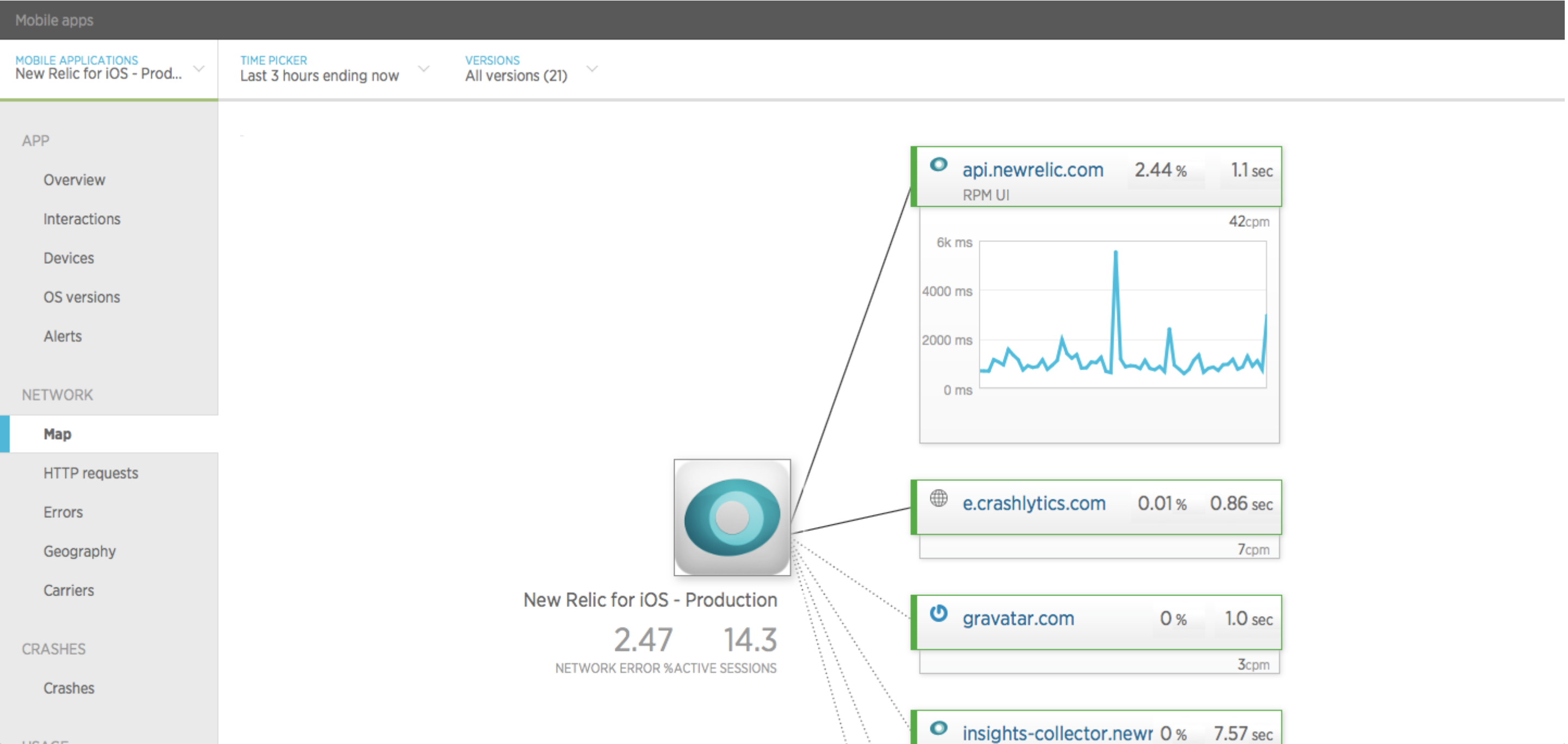

Another SDK-based option is New Relic Mobile. Embedding their SDK in your app enables tracking of a host of network and other performance data. Where New Relic appears to have an advantage is in showing the connections your app has with other New Relic-monitored services (backend sites, for example).

Otherwise, New Relic provides most of the same types of performance data you'd expect. They also give you a "User Interaction Summary" which appears to be similar to HeadSpin's high-level detail of potential UX issues. In addition, metrics can be viewed in aggregate across device types, so it's easy to spot performance trends at the level of device type as well.

Since this is an SDK-based solution, you'll have to modify your app under test to gather the data. Presumably you could then use it in your Appium tests just fine!

Conclusion

In this article we examined a number of cloud services and other types of paid software used for performance testing. A small handful of these companies supports Appium as a first-class citizen, meaning you can use their cloud to run your Appium tests themselves, and potentially get a lot of useful performance data for free along with it.

In my mind, this is the best possible approach for Appium users--just write your functional tests the way a user would walk through your app, then run the tests on one of these platforms, and you'll get performance reports for free! (Or rather, for hopefully no additional fee). The best kind of performance test is obviously the one you didn't have to write explicitly yourself, but which still found useful information that can easily be acted on in order to improve the overall UX of your app.

Oh, and did I miss a good candidate for this article? It's entirely possible I did! This is a big and growing industry. Let me know about it and I'll update this list if I find a relevant product.